|

|

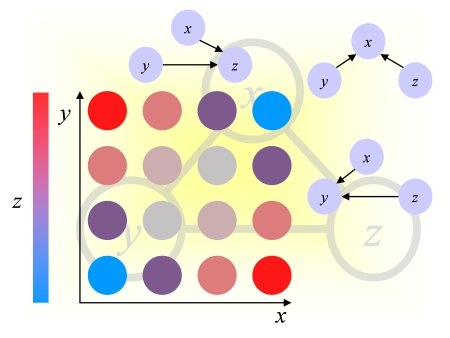

Modeling can have a number of objectives, including understanding or explaining data, developing scientific theories, and making predictions. We focus in this challenge on predictive modeling. The goal is to predict an outcome y given a number of predictor variables x=[x1, x2, … xn], also called features, attributes, or factors. For instance, in handwriting recognition, the predictor variables may indicate the presence of features such as loops or segments oriented in a certain direction, and the target variable or "label" can be a letter or a word to be recognized. In chemo-informatics, the predictors are descriptors of the molecular shape and the target indicates e.g., the activity of the molecule against a given disease. In text processing, the predictors may be simple frequencies of words and the target could be a document category such as "politics", "sports", "computers", etc.

Producing predictive models in the "supervised learning" setting requires a "training set" of labeled examples, i.e., examples x for which the target value or "label" y is known. Those are used to train the predictive model, which is then evaluated with new examples (test set) to estimate its "generalization performance".

In many applications, including handwriting recognition, chemo-informatics, and text processing, large amounts of unlabeled data are available at low cost (x only is known), but labeling examples (using a human expert to find the corresponding y value) is tedious and expensive. Hence there is a benefit to either use unlabeled data to improve the model in a semi-supervised learning algorithm, or to sample efficiently x-space to use human expertise for labeling only the most informative examples.

In this challenge, labels can only be obtained at a cost. In the regular machine learning setting (passive learning), a batch of training pairs {x, y} is made readily available (training set). In our active learning setting, the labels y are withheld and can be purchased for a certain amount of virtual cash. The learning machine may be used to select the examples, which look most promising to improve the predictive model. There exist several variants of active learning:

Stream-based active learning is also important when sensor data is continuously available and data cannot be easily stored. However, it is more difficult to evaluate in the context of a challenge. It is reasonable to assume that several of the techniques developed for pooled-based active learning will also be applicable to stream-based active learning.

We do not consider "de-novo" queries in this challenge either. The problem of de-novo queries is conceptually rather different because it involves human interventions on the system that may disrupt its normal functioning (interventions or manipulations). We will introduce such a setup in an upcoming challenge on experimental design.

A number of query strategies with various criteria of optimality have been devised. Perhaps the simplest and most commonly used query strategy is uncertainty sampling (Lewis and Gale, 1994). In this framework, an active learner queries the instances that it can label with least confidence. This of course requires the use of a model that is capable of assessing prediction uncertainty, such as a logistic model for binary classification problems. Another general active learning framework queries the labels of the instances that would impart the greatest change in the current model (expected model change), if we knew the labels. Since discriminative probabilistic models are usually trained with gradient-based optimization, the "change" imparted can be measured by the magnitude of the gradient (Settles et al. 2008).

A more theoretically motivated query strategy is query-by-committee (QBC - Seung et al, 1992). The QBC approach involves maintaining a committee of models, which are all trained on the current set of labeled samples, but represent competing hypotheses. Each committee member votes on the labels of query candidates and the query considered most informative is the one on which they disagree most. It can be shown that this is the query that potentially gives the largest reduction in the space of hypotheses (models) consistent with the current training dataset (version space). A related approach is Bayesian active learning. In the Bayesian setting, a prior over the space of hypotheses gets revised into a posterior after seeing data. Bayesian active learning algorithms, for instance, (Tong and Koller, 2000) maximize the expected Kullback-Leibler divergence between the revised posterior distribution (after learning with the new queried example) and the current posterior distribution given the data already seen. Hence this can be seen both as an extension of the expected model change framework for a Bayesian committee and a probabilistic reduction of hypothesis space.

A more direct criterion of optimality seeks queries that are expected to produce the greatest reduction in generalization error (expected error reduction). (Cohn et al., 1996) proposed the first statistical analyses of active learning, demonstrating how to synthesize queries that minimize the learner's future error by minimizing its variance. However, their approach applies only to regression tasks and synthesizes queries de novo. Another more direct, but very computationally expensive approach is to tentatively add to the training set all possible candidate queries with one of the opposite label and estimate how much generalization error reduction would result by adding it to the training set (Roy and McCallum, 2001).

It has been suggested that uncertainty sampling and QBC strategies are prone to querying outliers and therefore are not robust. The information density framework (Settles and Craven, 2008) addresses that problem by calling informative instances that are not only uncertain, but representative of the input distribution.

(Cohn et al, 1996) D. Cohn, Z. Ghahramani, and M.I. Jordan. Active learning with statistical models.

Journal of Artificial Intelligence Research, 4:129-145, 1996.

(Lewis and Gale, 1994) D. Lewis and W. Gale. A sequential algorithm for training text classifiers. In

Proceedings of the ACM SIGIR Conference on Research and Development in Information Retrieval, pages 3-12. ACM/Springer, 1994.

(Roy and McCallum, 2001) N. Roy and A. McCallum. Toward optimal active learning through sampling estimation of error reduction. In Proceedings of the International Conference on Machine Learning (ICML), pages 441-448. Morgan Kaufmann, 2001.

(Settles et al, 2008) B. Settles, M. Craven, and S. Ray. Multiple-instance active learning. In Advances

in Neural Information Processing Systems (NIPS), volume 20, pages 1289- 1296. MIT Press, 2008.

(Settles, 2009) Burr Settles. Active Learning Literature Survey. Computer Sciences Technical Report 1648, University of Wisconsin-Madison. 2009.

(Settles and Craven, 2008) B. Settles and M. Craven. An analysis of active learning strategies for sequence labeling tasks. In Proceedings of the Conference on Empirical Methods in Natural Language Processing (EMNLP), pages 1069-1078. ACL Press, 2008.

(Seung et al., 1992) H.S. Seung, M. Opper, and H. Sompolinsky. Query by committee. In Proceedings of the ACM Workshop on Computational Learning Theory, pages 287-294, 1992.

(Tong and Koller, 2000) Simon Tong, Daphne Koller: Active Learning for Parameter Estimation in Bayesian Networks. NIPS 2000: 647-653.