|

|

This challenge addresses machine learning problems in which labeling data is expensive, but large amounts of unlabeled data are available at low cost. Such problems might be tackled from different angles: learning from unlabeled data or active learning. In the former case, the algorithms must satisfy themselves with the limited amount of labeled data and capitalize on the unlabeled data with semi-supervised learning methods. In the latter case, the algorithms may place a limited number of queries to get labels. The goal in that case is to optimize the queries to label data and the problem is referred to as active learning.

| Registered | |

|---|---|

| Entrants | |

| Development experiments | |

| Final experiments |

Much of machine learning and data mining has been so far concentrating on analyzing data already collected, rather than collecting data. While experimental design is a well-developed discipline of statistics, data collection practitioners often neglect to apply its principled methods. As a result, data collected and made available to data analysts, in charge of explaining them and building predictive models, are not always of good quality and are plagued by experimental artifacts. In reaction to this situation, some researchers in machine learning and data mining have started to become interested in experimental design to close the gap between data acquisition or experimentation and model building. This has given rise of the discipline of active learning. In parallel, researchers in causal studies have started raising the awareness of the differences between passive observations, active sampling, and interventions. In this domain, only interventions qualify as true experiments capable of unraveling cause-effect relationships. However, most practical experimental designs start with sampling data in a way to minimize the number of necessary interventions.

The Causality Workbench will propose in the next few month several challenges to evaluate methods of active learning and experimental design, which involve the data analyst in the process of data collection. From our perspective, to build good models, we need good data. However, collecting good data comes at a price. Interventions are usually expensive to perform and sometimes unethical or impossible, while observational data are available in abundance at a low cost. Practitioners must identify strategies for collecting data, which are cost effective and feasible, resulting in the best possible models at the lowest possible price. Hence, both efficiency and efficacy are factors of evaluation in these evaluations.

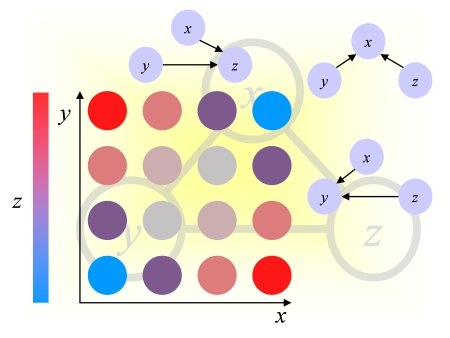

The setup of this first challenge represented in the figure above considers only sampling as an intervention of the data analyst or the learning machine, who may only place queries on the target values (labels) y. So-called "de novo queries" in which new patterns x can be created are not considered. We will consider them in an upcoming challenge on experimental design in causal discovery.

In this challenge, we propose several tasks of pool-based active learning in which a large unlabeled dataset is available from the onset of the challenge and the participants can place queries to acquire data for some amount of virtual cash. The participants will need to return prediction values for all the labels every time they want to purchase new labels. This will allow us to draw learning curves prediction performance vs. amount of virtual cash spend. The participants will be judged according to the area under the learning curves, forcing them to optimize both efficacy (obtain good prediction performance) and efficiency (spend little virtual cash).

Learn more about active learning...