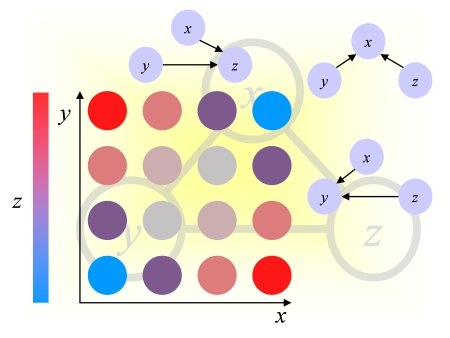

One important goal of causal modeling is to unravel enough of the data generating process to be able to predict the consequences of actions (also called interventions, experiments or manipulations) performed by an external agent. This setup violates the classical i.i.d. assumption commonly made in machine learning: the data on which predictions must be made come from a distribution modified by the action, not from the distribution of the training data. For instance, in policy-making, one may want to predict "the effect on a population's health status" of "forbidding smoking in public places" before passing a law.
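The gap between observing and acting can be made concrete with a toy computation. The sketch below uses a hypothetical three-variable model with made-up probabilities (it is not the LUCAS model): a genetic factor G influences both smoking S and lung cancer C, and S also influences C. Conditioning on observed smokers gives a different cancer rate than clamping S = 1 by intervention, because once S is set by an external agent it no longer carries information about G. A predictor fitted only to observational data would therefore be miscalibrated for the post-intervention population.

```python
# Hypothetical toy model with made-up numbers (not LUCAS):
# G = genetic factor, S = smoking, C = lung cancer; edges G -> S, G -> C, S -> C.
P_G = 0.3                        # P(G=1)
P_S_GIVEN_G = {0: 0.2, 1: 0.8}   # P(S=1 | G=g)


def p_c(s, g):
    """P(C=1 | S=s, G=g)."""
    return 0.05 + 0.2 * s + 0.3 * g


def bern(p, x):
    """Probability of outcome x in {0, 1} for a Bernoulli(p) variable."""
    return p if x == 1 else 1 - p


def p_cancer_observed(s):
    """P(C=1 | S=s): passively observing S=s also tells us something about G."""
    joint = {g: bern(P_G, g) * bern(P_S_GIVEN_G[g], s) for g in (0, 1)}  # P(G=g, S=s)
    return sum(joint[g] * p_c(s, g) for g in (0, 1)) / sum(joint.values())


def p_cancer_do(s):
    """P(C=1 | do(S=s)): clamping S cuts the edge G -> S, so G keeps its prior."""
    return sum(bern(P_G, g) * p_c(s, g) for g in (0, 1))


observed = p_cancer_observed(1)
intervened = p_cancer_do(1)
print(f"observed: {observed:.3f}  intervened: {intervened:.3f}")
# observed: 0.439  intervened: 0.340
```

The interventional value is the observational formula with the factor P(S=s | G=g) dropped, which is exactly what clamping a variable does in a causal model.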

While many algorithms have been proposed recently to learn causal relationships from non-experimental data (simple observations without external intervention), experimentation is still considered the ultimate means of validating a causal model. However, experiments are often costly, and sometimes impossible or unethical to perform.

The virtual lab offers researchers the possibility to experiment with simulated systems (for a list, see the Index page) by setting the values of certain variables and observing others. The setup is realistic: the artificial systems model real applications in medicine, marketing, life sciences, social sciences, etc., and experiments cost a certain amount of virtual cash, with simple observations without intervention generally costing less. The predictive power of a model can then be evaluated on test data corresponding to new interventions.

To understand the basic concept of experimentation in causal modeling, you may want to first look at these historical examples in epidemiology brought to us by ThinkQuest:

- Lung cancer
- Smallpox
- Food poisoning

Use `zip query.zip *` or `tar cvf query.tar *; gzip query.tar` to create valid archives. We provide several examples of queries for the LUCAS model:

| Filename | Non-experimental data | Experimental data | Survey data | Prediction results | Description | File Format |
|---|---|---|---|---|---|---|
| [submission].query | Compulsory (TRAIN, TEST [n] or OBS [num]) | Compulsory (EXP) | Optional (SURVEY) | Optional (PREDICT [n]) | Type of query. | A single keyword on the first line, optionally followed by a number on the same line. TRAIN: get the default training set. TEST [n]: replace [n] by 1, 2, 3 to get the nth test set; TEST is equivalent to TEST 1. OBS [num]: get observational data; replace [num] by the number of samples requested. EXP: get experimental data (the number of samples is determined by the number of lines in [submission].sample and [submission].manipval). SURVEY: get training labels. PREDICT [n]: replace [n] by 1, 2, 3 to indicate that the predictions correspond to the nth test set; PREDICT is equivalent to PREDICT 1. |
| [submission].sample | NA | Optional | Compulsory | NA | Sample IDs in the default training set. The corresponding samples are used to set the pre-manipulation values. | A list of sample numbers, one per line (the numbering is 1-based and corresponds to lines in the training data). |
| [submission].premanipvar | Optional | Optional | Optional | NA | List of the pre-manipulation variables (observed before or without experimentation). By default (no file given): (1) for non-experimental and experimental data, all the observable variables except the target; (2) for survey data, the target. | A space-delimited list of variable numbers on the first line of the file. All variables are numbered from 1 to the maximum number of visible variables, except the target variable (if any), which is numbered 0. |
| [submission].manipvar | NA | Compulsory | NA | NA | List of the variables to be manipulated (clamped). | Same format as [submission].premanipvar. |
| [submission].postmanipvar | NA | Compulsory | NA | Optional | List of the post-manipulation variables (observed after experimentation). By default: the target variable. | Same format as [submission].premanipvar. |
| [submission].premanipval | NA | NA | NA | NA | Not applicable: use [submission].sample to initialize the values of the pre-manipulation variables. | Each line corresponds to an instance (sample) and should contain space-delimited values for all the variables of that instance. Use NaN if a value is missing or omitted. The number of lines in [submission].manipval should match the number of samples in [submission].sample (if provided). You may omit [submission].query and provide [submission].predict instead of [submission].postmanipval if there is a single test set and no experiments are involved. |
| [submission].manipval | NA | Compulsory | NA | NA | Clamped values for the manipulated variables listed in [submission].manipvar. | Same format as [submission].premanipval. |
| [submission].postmanipval or [submission].predict | NA | NA | NA | Compulsory | Predicted values for all the samples of the nth test set (TEST [n]). | Same format as [submission].premanipval. |
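To illustrate the submission format, the following sketch writes the files of an EXP query and packs them at the root of a zip archive, the Python counterpart of `zip query.zip *`. The helper names (`fmt`, `write_exp_query`, `make_archive`), the base name `myquery`, and the variable numbers and clamped values are all hypothetical; the real numbering depends on the model being queried. The optional `.premanipvar` file is only written when a list is given.

```python
import math
import tempfile
import zipfile
from pathlib import Path


def fmt(value):
    """Format one value; missing values are written as the literal NaN."""
    if value is None or (isinstance(value, float) and math.isnan(value)):
        return "NaN"
    return str(value)


def write_exp_query(outdir, name, samples, manipvar, postmanipvar, manipval,
                    premanipvar=None):
    """Write the files of a hypothetical EXP query into outdir; return their paths."""
    if any(s < 1 for s in samples):
        raise ValueError("sample numbers are 1-based line numbers of the training data")
    if len(manipval) != len(samples):
        raise ValueError("manipval needs one line per sample listed in .sample")
    if any(len(row) != len(manipvar) for row in manipval):
        raise ValueError("each manipval line needs one value per manipulated variable")

    files = {
        "query": "EXP\n",                                         # keyword on the first line
        "sample": "".join(f"{s}\n" for s in samples),             # one sample number per line
        "manipvar": " ".join(map(str, manipvar)) + "\n",          # variable numbers, first line
        "postmanipvar": " ".join(map(str, postmanipvar)) + "\n",  # 0 denotes the target
        "manipval": "".join(" ".join(map(fmt, row)) + "\n" for row in manipval),
    }
    if premanipvar is not None:                                   # optional for EXP queries
        files["premanipvar"] = " ".join(map(str, premanipvar)) + "\n"

    paths = []
    for ext, text in files.items():
        path = Path(outdir) / f"{name}.{ext}"
        path.write_text(text)
        paths.append(path)
    return paths


def make_archive(paths, archive_path):
    """Store the files at the archive root, as `zip query.zip *` would."""
    with zipfile.ZipFile(archive_path, "w", zipfile.ZIP_DEFLATED) as zf:
        for path in paths:
            zf.write(path, arcname=path.name)
    return archive_path


# Usage: clamp hypothetical variable 1 on training samples 1-3, observe the target (0).
with tempfile.TemporaryDirectory() as tmp:
    paths = write_exp_query(tmp, "myquery", samples=[1, 2, 3], manipvar=[1],
                            postmanipvar=[0], manipval=[[1], [1], [0]])
    archive = make_archive(paths, Path(tmp) / "query.zip")
    with zipfile.ZipFile(archive) as zf:
        members = sorted(zf.namelist())
    manipval_text = (Path(tmp) / "myquery.manipval").read_text()
print(members)
```

The checks at the top of `write_exp_query` mirror the constraints stated in the table (1-based sample numbers, one `.manipval` line per sample), so a malformed query fails locally instead of spending virtual cash.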

| Filename | Non-experimental training data | Survey data | Experimental training data | Test data | Evaluation score | Description | File Format |
|---|---|---|---|---|---|---|---|
| [submission].query | Optional (TRAIN or OBS) | Optional (SURVEY) | Optional (EXP) | Optional (TEST) | Optional (PREDICT) | Type of query. | One keyword, optionally followed by a number, on the first line (copied from the submitted query). |
| [answer].premanipvar | Optional | NA | Present if requested | Present | NA | List of the pre-manipulation variables. By default, all the observable variables except the target. | A space-delimited list of variable numbers on the first line of the file. All variables are numbered from 1 to the maximum number of visible variables, except the default target variable (if any), which is numbered 0. If the file is missing or empty, an empty list is assumed. |
| [answer].manipvar | NA | NA | Present | Optional | NA | List of the variables to be manipulated (clamped). | Same format as [answer].premanipvar. |
| [answer].postmanipvar | NA | NA | Optional | Optional | NA | List of the post-manipulation variables. By default: the target variable (0). | Same format as [answer].premanipvar. |
| [answer].premanipval or [answer].data | Present | NA | Present if requested | Present | NA | Pre-manipulation values. | Each line corresponds to an instance (sample) and contains space-delimited values for all the variables of that instance (or a single target value for [answer].label files). [answer].data files contain unlabeled default training data for problems without experimentation. |
| [answer].label | NA (to get the target variable values, use the index 0) | Present | NA (to get the target variable values, use the index 0) | NA | NA | Target values for the default training examples. Equivalent to [answer].premanipval when [answer].premanipvar (with the single value 0) is omitted. | Same format as [answer].premanipval. |
| [answer].manipval | NA | NA | Present | Optional | NA | Clamped values for the manipulated variables listed in [answer].manipvar. These correspond to manipulations performed by the organizers, so they are free of charge. | Same format as [answer].premanipval. |
| [answer].postmanipval | NA | NA | Present | Hidden from the participants | NA | Post-manipulation values, in answer to [submission].postmanipvar. | Same format as [answer].premanipval. |
| [answer].is_overbudget | Optional | Optional | Optional | Optional | Optional | File indicating that the budget was overspent and the query was not processed. | The value 1. |
| [answer].score | NA | NA | NA | NA | Present | Prediction score. | A numeric value. |
| [answer].ebar | NA | NA | NA | NA | Present | Error bar. | A numeric value. |
| [answer].varnum | Present | Present | Present | Present | NA | The total number of observable variables (excluding the target). | A numeric value. |
| [answer].samplenum | Present | Present | Present | Present | NA | Number of samples requested. | A numeric value. |
| [answer].obsernum | Present | Present | Present | Present | NA | Number of variable values observed (including the target). | A numeric value. |
| [answer].manipnum | Present | Present | Present | Present | NA | Number of values manipulated. | A numeric value. |
| [answer].targetnum | Present | Present | Present | Present | NA | Number of target values observed. | A numeric value. |
| [answer].samplecost | Present | Present | Present | Present | NA | Cost for the samples requested (labeled samples may cost more than unlabeled samples). | A numeric value. |
| [answer].obsercost | Present | Present | Present | Present | NA | Cost for the observations made. | A numeric value. |
| [answer].manipcost | Present | Present | Present | Present | NA | Cost for the manipulations made. | A numeric value. |
| [answer].targetcost | Present | Present | Present | Present | NA | Additional cost for target observations. | A numeric value. |
| [answer].totalcost | Present | Present | Present | Present | NA | Total cost. | A numeric value. |
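On the receiving side, the answer files can be read with a few small parsers. This is a minimal sketch assuming the answer arrives as a flat zip archive (an assumption: a `.tar.gz` answer would need `tarfile` instead); the helper names and the in-memory example answer, including its file names and values, are invented for illustration. Files are keyed by extension so the answer's base name does not matter; `.label` files, with one target value per line, can be read with `parse_values` as well.

```python
import io
import math
import zipfile
from pathlib import PurePosixPath


def read_answer(archive):
    """Map each file's extension (e.g. "premanipval", "totalcost") to its text.

    Assumes a flat zip archive; the answer's base name is ignored.
    """
    with zipfile.ZipFile(archive) as zf:
        return {PurePosixPath(name).suffix.lstrip("."): zf.read(name).decode()
                for name in zf.namelist()}


def parse_varlist(text):
    """Variable numbers on the first line (0 = target); empty text gives []."""
    lines = text.splitlines()
    return [int(tok) for tok in lines[0].split()] if lines else []


def parse_values(text):
    """One sample per line, space-delimited; float() maps "NaN" to nan."""
    return [[float(tok) for tok in line.split()]
            for line in text.splitlines() if line.strip()]


def parse_number(text):
    """A single numeric value, as in the .score, .ebar and cost files."""
    return float(text.strip())


# Usage with a made-up answer built in memory (file names and values are invented).
buf = io.BytesIO()
with zipfile.ZipFile(buf, "w") as zf:
    zf.writestr("answer.premanipvar", "1 2\n")
    zf.writestr("answer.premanipval", "0 1\n1 NaN\n")
    zf.writestr("answer.totalcost", "12.5\n")

files = read_answer(buf)
over_budget = "is_overbudget" in files and parse_number(files["is_overbudget"]) == 1
variables = parse_varlist(files.get("premanipvar", ""))
rows = parse_values(files.get("premanipval", files.get("data", "")))
missing = sum(math.isnan(v) for row in rows for v in row)
total = parse_number(files["totalcost"])
print(over_budget, variables, rows, missing, total)
```

Checking for the `is_overbudget` file first matters: when it is present, the query was not processed, so the data files should not be trusted.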